Introduction

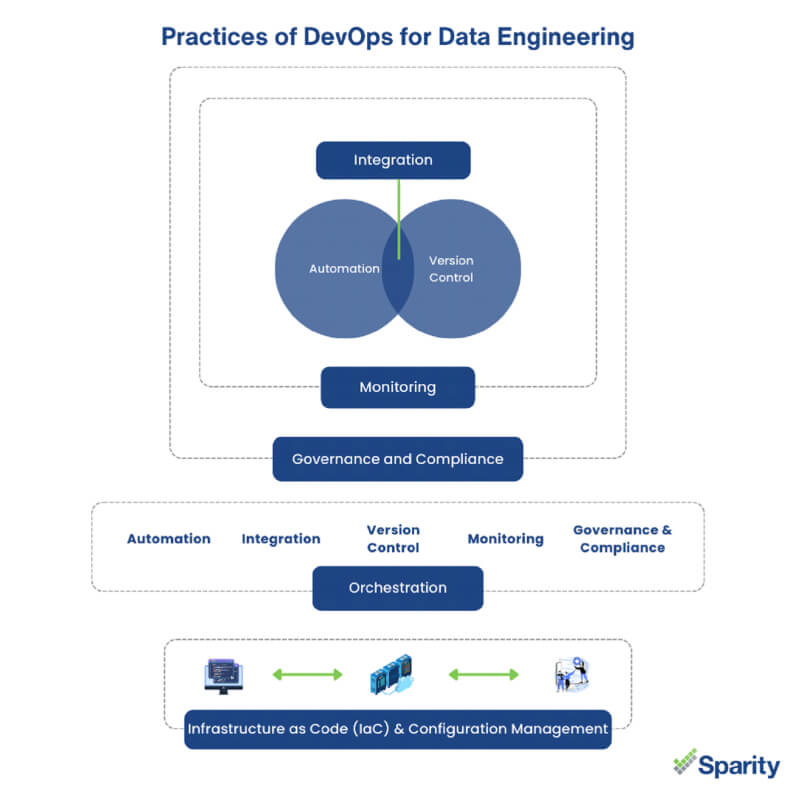

DevOps for data focuses on optimizing processes and accelerating delivery, mirroring the objectives of DevOps in software development and IT operations.

In this blog, we look at DevOps practices for data engineering and how they improve data engineering workflows, including data quality and governance. Just as integrating applications and tools is crucial in the software industry, integrating services and technologies is equally important in data operations.

Automation

Version control, also known as source control or revision control, is the management of changes to documents, files, or software projects. It enables multiple developers to work on the same project concurrently, keeps track of modifications, and provides a history of changes. This ensures that changes can be reviewed, reverted if necessary, and merged with other contributions. A wide range of version control tools is available.

Integration

Integration, a core practice in DevOps for data, revolves around connecting diverse software tools and processes to enable seamless data sharing and collaboration. Integrating databases, files, and APIs helps you consolidate data in one place for comprehensive analysis.

For instance, Microsoft Fabric, a SaaS analytics platform, brings together Power BI, Azure Synapse, and Azure Data Factory capabilities with centralized administration and a unified data lake (OneLake). Integration enhances interoperability, enabling efficient data management and analysis across platforms.
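
As a rough illustration of consolidating databases, files, and APIs in one place, the Python sketch below merges records from three sources into a single list. The `orders` table, the field names, and the sample payloads are all hypothetical, and the API response is simulated with an in-memory JSON string instead of a real HTTP call.

```python
import csv
import io
import json
import sqlite3


def from_database(conn):
    """Read rows from a hypothetical `orders` table."""
    rows = conn.execute("SELECT order_id, amount FROM orders ORDER BY order_id")
    return [{"order_id": r[0], "amount": float(r[1]), "source": "database"} for r in rows]


def from_csv(text):
    """Read rows from CSV text, as if it had been loaded from a file."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        {"order_id": int(r["order_id"]), "amount": float(r["amount"]), "source": "file"}
        for r in reader
    ]


def from_api(payload):
    """Parse a JSON payload that stands in for an API response body."""
    return [
        {"order_id": int(r["order_id"]), "amount": float(r["amount"]), "source": "api"}
        for r in json.loads(payload)
    ]


def consolidate(*batches):
    """Merge all batches, keeping the first record seen for each order_id."""
    merged = {}
    for batch in batches:
        for record in batch:
            merged.setdefault(record["order_id"], record)
    return [merged[key] for key in sorted(merged)]


def build_sample_db():
    """Create an in-memory database with illustrative data."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 25.5)])
    return conn


# Illustrative inputs only; order 2 appears in both the database and the file.
SAMPLE_CSV = "order_id,amount\n2,25.5\n3,7.25\n"
SAMPLE_API = '[{"order_id": 4, "amount": 99.0}]'
```

Calling `consolidate(from_database(build_sample_db()), from_csv(SAMPLE_CSV), from_api(SAMPLE_API))` yields one record per order. Keeping the first record seen is just one possible deduplication rule; a real pipeline would choose its own precedence between sources.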

Version Control

Version control in data engineering acts as a safeguard, akin to a time machine, tracking changes in data pipelines and analysis code. This makes it easy to restore a previous version when errors occur.

For example, Power BI currently supports integration with Git version control, in line with Microsoft’s suite of tools and software offerings. Keeping Power BI files such as PBIX or PBIT in a Git repository hosted on Azure DevOps ensures traceability and supports collaborative development. Version control enhances reliability and reproducibility in data engineering workflows.

Monitoring

Monitoring, crucial in DevOps for data, ensures the smooth operation and efficiency of data pipelines and analysis processes. Real-time monitoring of data patterns enables prompt identification and resolution of issues. Proactively monitoring data integrity and performance helps mitigate potential problems before they escalate. Effective monitoring optimizes resource utilization and enhances data reliability, supporting uninterrupted data processing and analysis.
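
A minimal sketch of what proactive checks on data integrity and performance could look like is shown below. The `BatchStats` fields and the threshold values are assumptions chosen for illustration, not recommendations from any particular monitoring tool.

```python
from dataclasses import dataclass


@dataclass
class BatchStats:
    """Hypothetical summary of one pipeline run."""
    row_count: int
    null_count: int
    duration_seconds: float


def check_batch(stats, min_rows=1, max_null_ratio=0.05, max_duration_seconds=300.0):
    """Return a list of alert messages; an empty list means the batch looks healthy."""
    alerts = []
    # Integrity check: the batch should not be unexpectedly small.
    if stats.row_count < min_rows:
        alerts.append(f"row count {stats.row_count} is below minimum {min_rows}")
    # Integrity check: the share of null values should stay under the threshold.
    if stats.row_count > 0:
        null_ratio = stats.null_count / stats.row_count
        if null_ratio > max_null_ratio:
            alerts.append(f"null ratio {null_ratio:.2%} exceeds {max_null_ratio:.2%}")
    # Performance check: the run should finish within the time budget.
    if stats.duration_seconds > max_duration_seconds:
        alerts.append(
            f"duration {stats.duration_seconds:.0f}s exceeds {max_duration_seconds:.0f}s"
        )
    return alerts
```

In practice, the returned alerts would be routed to whatever notification channel the team already uses, and the thresholds would be tuned per pipeline.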

Governance and Compliance

Adherence to rules governing data privacy, security, and usage is imperative for DevOps for data teams. Compliance measures safeguard sensitive information against unauthorized access or misuse. Data tools support data protection with security features such as row-level security. DevOps tools also incorporate security measures to uphold data governance standards, fostering trust and regulatory compliance.
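
To make the idea of row-level security concrete, here is a small Python sketch that filters rows by the regions a user is allowed to see. It only illustrates the concept: the `region` column, the user names, and the policy mapping are hypothetical, and real data tools enforce such rules inside the platform itself, not in application code like this.

```python
def apply_row_level_security(rows, user, policies):
    """Return only the rows whose region the given user is allowed to see.

    `policies` maps a user name to the set of regions that user may access.
    Users with no policy entry see nothing (deny by default).
    """
    allowed_regions = policies.get(user, set())
    return [row for row in rows if row["region"] in allowed_regions]


# Illustrative data and policies only.
SALES = [
    {"region": "EU", "revenue": 120},
    {"region": "US", "revenue": 300},
    {"region": "APAC", "revenue": 80},
]
POLICIES = {
    "eu_analyst": {"EU"},
    "global_admin": {"EU", "US", "APAC"},
}
```

Denying access by default for unknown users is a deliberate choice here, since it avoids exposing data when a policy is missing.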

Orchestration

Orchestration, integral to DevOps for data strategies, coordinates the components of data infrastructure and processing pipelines so that they operate efficiently. Whether managing extensive data volumes or complex analytics tasks, orchestration ensures that work runs in the right order and on schedule. Running containerized workloads (for example, Docker containers) under an orchestrator such as Kubernetes optimizes resource allocation and enhances scalability, facilitating agile and efficient data processing.
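
The core of orchestration, running tasks in an order that respects their dependencies, can be sketched in a few lines of Python. This is a simplified illustration and not how Kubernetes or any specific orchestrator works; the task names are hypothetical, and the standard-library `graphlib` module requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies):
    """Run each task after all of its upstream tasks have finished.

    `tasks` maps a task name to a zero-argument callable.
    `dependencies` maps a task name to the set of task names it depends on.
    Returns the order in which the tasks were executed.
    """
    # static_order() yields every node with its predecessors first and
    # raises graphlib.CycleError if the dependencies contain a cycle.
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        tasks[name]()
    return order
```

For a pipeline where `transform` depends on `extract` and `load` depends on `transform`, passing `{"transform": {"extract"}, "load": {"transform"}}` as `dependencies` runs the three steps in that sequence. Production orchestrators add scheduling, retries, and parallel execution on top of this basic ordering.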

Infrastructure as Code (IaC) & Configuration Management

IaC and configuration management enable consistent, automated management of data infrastructure and settings. Defining infrastructure configurations as code makes deployments repeatable and scalable. IaC tools automate the provisioning and management of data storage, compute clusters, and networking resources. Configuration management practices standardize data engineering environments, enhancing reliability and scalability. All of this aligns with the principles of DevOps for data engineering workflows.
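
The declarative idea behind IaC is to describe the desired infrastructure as data and let a tool work out the changes needed to get there. The Python sketch below shows that comparison step under simplified assumptions; the resource names and settings are hypothetical, and no real provisioning API is called.

```python
def plan_changes(desired, current):
    """Compare desired and current resource definitions and list required actions.

    Both arguments map a resource name to its configuration dictionary.
    Returns a list of (action, resource_name) tuples.
    """
    actions = []
    for name in sorted(desired):
        if name not in current:
            actions.append(("create", name))
        elif desired[name] != current[name]:
            actions.append(("update", name))
    # Anything that exists but is no longer declared gets removed.
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Illustrative definitions only.
DESIRED = {
    "storage": {"tier": "hot"},
    "cluster": {"nodes": 4},
}
CURRENT = {
    "cluster": {"nodes": 2},
    "old_vm": {"size": "small"},
}
```

Because the plan is derived purely from the two definitions, running it again once the environment matches the desired state produces no actions, which is what makes such deployments repeatable.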

Benefits of Leveraging DevOps for Data Engineering

| Aspect | DevOps | DataOps |
|---|---|---|
| Focus | Software development and IT operations | Data integration, analytics, and data engineering |
| Core Objective | Accelerating software delivery and operations | Enhancing data quality, collaboration, and agility |
| Lifecycle | Entire software development lifecycle | Data lifecycle, from acquisition to analysis |
| Tools | CI/CD tools (e.g., Jenkins, GitLab) | Data integration tools (e.g., Apache NiFi, Talend) |
| Methodologies | Agile, continuous integration, continuous deployment | Iterative development, automated testing, continuous integration |
| Data-centricity | Less emphasis on data quality and governance | Focus on data quality, governance, and compliance |
| Automation | Automated deployment, monitoring, and testing | Automated data pipelines, cleansing, and validation |
| Collaboration | Collaboration between developers and operations | Collaboration among data engineers, analysts, scientists, and business operations teams |
| Goal | Faster and more reliable software delivery | Efficient and scalable data processing and analysis |
| Metrics | Deployment frequency, lead time, MTTR | Data quality, time to insight, data processing time |

DevOps vs DataOps

What is DevOps?

DevOps combines software development and IT operations to streamline the software development lifecycle, emphasizing automation, collaboration, and continuous delivery.

What is DataOps?

DataOps focuses on improving data analytics by applying principles from Agile development and DevOps to enhance data quality, collaboration, and agility in data-related processes.

Both approaches aim to optimize processes and accelerate delivery, but in different domains: software development and IT operations for DevOps, and data analytics for DataOps.

Conclusion

Optimizing workflows according to project requirements and available resources is paramount. By leveraging DevOps practices tailored to data engineering needs, organizations can enhance efficiency, collaboration, and data quality. Adapting workflows to project specifics ensures streamlined operations and maximized productivity, ultimately driving success in data-driven initiatives.

Why Sparity?

Sparity offers expertise in both data engineering and DevOps, adding significant value to your projects. Our expert team analyzes your requirements, provides tailored solutions, and manages the implementation process. Partner with us for seamless project execution and exceptional results.

FAQs

At this time, Microsoft Fabric and Power BI support Git in Azure DevOps. Git is a distributed version control system that provides a robust framework for managing source code and project files.

Microsoft Fabric is a comprehensive analytics solution for enterprises, covering everything from data movement and data science to real-time analytics and business intelligence.

Discover how AI in DevOps enhances efficiency, reduces errors, strengthens security, and automates coding, testing, and deployment.

Embrace the top 10 DevOps principles for streamlined, secure, and efficient software project delivery with automation and continuous deployment.

Implement DevOps strategies for multicloud environments to optimize agility, security, and scalability across multiple cloud providers.