Introduction

AI product development is the art of crafting intelligent systems that emulate human-like cognitive functions. Neglecting key considerations in this intricate process can compromise brand integrity and user trust. It’s imperative to navigate ethical, technical, and user-centric challenges thoughtfully, as overlooking these critical facets may not only hinder success but also lead to unintended consequences for both the company and its users. In this blog, we’ll explore the pivotal factors that demand careful consideration in generative AI product development.

Ethical Considerations

In AI product development, ethical considerations are paramount. Developers must prioritize responsible AI practices, ensuring their models do not generate content that promotes hate speech, discrimination, or harm. Implementing ethical content moderation mechanisms to filter out offensive and inappropriate output is imperative. Disclosing the AI’s limitations and potential biases is crucial to gaining user trust. Respecting user privacy by minimizing data collection and providing clear consent mechanisms is essential. Following ethical guidelines safeguards users and the company and contributes to the responsible advancement of AI technology in alignment with societal values.
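
As a minimal sketch of the output-filtering idea above, the Python snippet below screens generated text against a blocklist before it is returned to the user. The names `BLOCKED_TERMS`, `FALLBACK_MESSAGE`, and `moderate` are hypothetical and chosen only for illustration. Keyword matching is a deliberately simple stand-in here; a production system would more likely rely on a trained classifier or a dedicated moderation service.

```python
import re

# Hypothetical placeholder terms for illustration only. A real deployment
# would maintain a reviewed list or use a trained classifier instead.
BLOCKED_TERMS = {"badword", "slur_example"}

FALLBACK_MESSAGE = (
    "This response was withheld because it may contain inappropriate content."
)


def moderate(generated_text: str) -> tuple[bool, str]:
    """Return (allowed, text); blocked output is replaced by a fallback message."""
    # Split into lowercase words so matching ignores case and punctuation.
    words = set(re.findall(r"[a-z_']+", generated_text.lower()))
    if words & BLOCKED_TERMS:
        return False, FALLBACK_MESSAGE
    return True, generated_text
```

Returning a flag alongside the text lets the calling code log blocked outputs for human review rather than silently discarding them.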

Legal Considerations

Checking generated output against copyrighted content is pivotal in AI product development. Rigorous measures must be implemented to steer clear of copyright infringement when generating text, images, or any other form of creative output. Developers need to be vigilant, respecting the rights of content creators and securing appropriate permissions for usage. Implementing content filters, licensing agreements, and monitoring tools can help safeguard against unauthorized reproduction. Awareness of fair use principles and of evolving copyright laws is crucial. Respect for trademarks is equally important to prevent unauthorized use of protected logos or brand elements. Compliance with other intellectual property laws, such as patent regulations, is also vital.

The New York Times sued OpenAI and Microsoft for copyright infringement, a new front in the debate over the use of published work to train AI. https://t.co/u8qZ247dCl

— The New York Times (@nytimes) December 27, 2023

Tone of Output

The tone of output is a critical aspect of generative AI product development, influencing user engagement and experience. The chosen tone, whether persuasive, informative, entertaining, or influential, shapes the product’s effectiveness. Persuasive tones can drive user actions; informative tones enhance understanding; entertaining tones captivate audiences; and influential tones guide opinions and decisions. Striking the right balance ensures alignment with user expectations and application objectives. Thoughtful consideration of tone contributes significantly to the product’s impact, making it more adaptable and appealing across diverse contexts. This is a strategic decision precisely because we cannot know in advance what purpose each user has in mind.

Deepfakes

It’s imperative to conscientiously steer away from endorsing deepfakes and defamatory visuals in AI product development. Emphasizing ethical considerations is paramount to preventing misuse and potential harm. Prioritize user education on responsible AI use, promoting transparency and accountability. Vigilance against the creation or dissemination of deceptive content should be embedded in product design and user guidelines. Encourage positive applications of generative AI while discouraging malicious intent. A vigilant stance ensures that the technology contributes positively to society, fostering trust and safeguarding against the negative repercussions associated with deepfakes and unethical use of AI-generated content.

Accuracy in AI Product Development

Accuracy is paramount in AI product development, as it directly influences user trust. Because a generative model produces text probabilistically, accuracy reflects how reliably the model generates coherent and contextually relevant content. Misleading output erodes user trust, which is why refining accuracy matters. A high level of accuracy ensures that the generated outputs align with user expectations, fostering a positive user experience. Striking the right balance between creativity and precision is crucial to maintaining credibility and avoiding unreliable information. Transparent communication about the model’s capabilities and limitations builds user confidence, reinforcing the ethical responsibility of developers to prioritize accuracy in creating a trustworthy generative AI product.

Source Credibility

Prioritizing source credibility is paramount. Rigorous assessment of data origin ensures ethical and legal compliance. Questions such as “Where are you getting data from?” and “Is it obtained through legal means?” become pivotal in AI product development. Upholding data integrity safeguards against misinformation and biased outputs. Employing reliable sources enhances the model’s accuracy and trustworthiness. Scrutinizing data provenance promotes responsible AI development, mitigates potential biases, and reinforces user confidence. Prioritizing source credibility not only aligns with ethical standards but also fortifies the robustness and reliability of generative AI products.

In May 2023, Twitter alleged in a letter to CEO Satya Nadella that Microsoft had breached a data agreement and used its data to train AI technologies. (Source: New York Times Article)
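
The two provenance questions above can be turned into a simple pre-training checklist. The sketch below is one hypothetical way to record and check that information; the `DataSource` fields and the `provenance_issues` function are assumptions made for illustration, not an established standard, and passing the check is no substitute for legal review.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DataSource:
    """Hypothetical record describing where a training dataset came from."""
    name: str
    origin: str                # "Where are you getting data from?"
    license: Optional[str]     # license or data agreement, if any is on record
    legally_obtained: bool     # "Is it obtained through legal means?"


def provenance_issues(source: DataSource) -> list[str]:
    """Return a list of unresolved provenance problems (empty means none found)."""
    issues = []
    if not source.origin.strip():
        issues.append("origin is not documented")
    if not source.license:
        issues.append("no license or usage agreement on record")
    if not source.legally_obtained:
        issues.append("legal acquisition has not been confirmed")
    return issues
```

A dataset would be admitted to training only when `provenance_issues` returns an empty list; anything else is routed to a person for follow-up.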

Sustainability

In AI product development, sustainability is paramount and demands scrutiny of the environmental impact of large language models (LLMs). Analyzing energy and water consumption is crucial, with efforts directed at algorithm optimization, energy-efficient hardware, and eco-friendly practices. Forbes reports University of Washington research indicating that ChatGPT’s queries consume as much energy each day as 33,000 U.S. households. According to a University of California, Riverside researcher cited in the same article, ChatGPT consumes 500 ml of water for every 5 to 50 prompts, contributing to the 34% surge in Microsoft’s global water usage from 2021 to 2022, which reached nearly 1.7 billion gallons. This underscores the need for eco-conscious advancements in AI.
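
To make the water figure concrete, the short calculation below converts "500 ml for every 5 to 50 prompts" into a per-prompt range and scales it to a given number of prompts. It uses only the numbers quoted above; the function name and the 1,000-prompt workload are hypothetical, and the result is a rough illustration rather than a measurement.

```python
WATER_ML = 500                     # millilitres, as quoted above
PROMPTS_LOW, PROMPTS_HIGH = 5, 50  # prompts served per 500 ml, as quoted above


def estimated_water_litres(num_prompts: int) -> tuple[float, float]:
    """Return the (low, high) estimate in litres for a number of prompts."""
    # Best case: 500 ml covers 50 prompts (10 ml per prompt).
    low = num_prompts * WATER_ML / PROMPTS_HIGH / 1000
    # Worst case: 500 ml covers only 5 prompts (100 ml per prompt).
    high = num_prompts * WATER_ML / PROMPTS_LOW / 1000
    return low, high


low, high = estimated_water_litres(1000)
print(f"1,000 prompts: between {low:.0f} and {high:.0f} litres of water")
# prints "1,000 prompts: between 10 and 100 litres of water"
```

The tenfold spread between the low and high estimates shows how uncertain the underlying figure is, which is worth stating whenever such numbers are reported.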

Human Involvement

Integrating human involvement is critical in AI product development. While AI excels at processing data and generating outputs, it lacks an understanding of human emotions and needs. Incorporating human insight becomes crucial, ensuring a product aligns with user expectations and contexts. Software devoid of human perspective might overlook the intricacies of user interaction scenarios. By involving humans in the development process, particularly in comprehending user emotions and requirements, a more empathetic and user-centric AI product can emerge, addressing the unpredictability of how users will employ the generated output. It is an indispensable step to bridge the gap between technical capabilities and genuine user satisfaction.

Ensure efficiency, not laziness

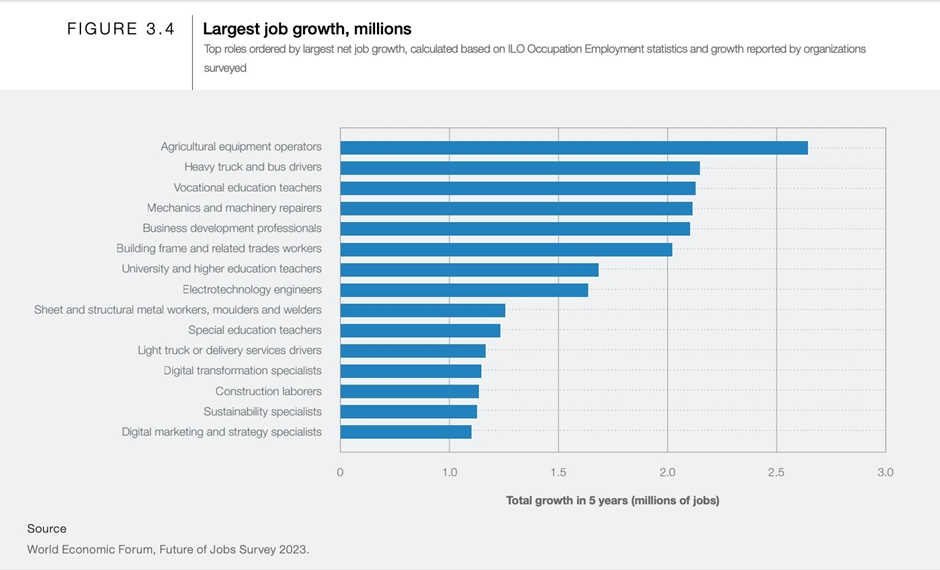

In AI product development, it’s essential to prioritize efficiency over laziness. We must ensure that our product doesn’t inadvertently mislead users. It’s good practice to encourage users to cross-check and customize generated content, promoting a sense of accountability. AI’s effect on employment is also contested: the World Economic Forum projects 85 million jobs at risk by 2025, while others argue that AI is fostering employment. This ongoing debate underscores the complexity of the issue. Striking a balance is crucial for responsible AI product development and for mitigating potential negative impacts on employment.

Source: Jobs that AI cannot easily replace according to World Economic Forum

Validation

Validation is crucial due to diverse regulations worldwide. For a globally released product, balancing user preferences, cultural nuances, and pricing is essential. Ensuring compliance with varying legal frameworks is vital, as regulations differ across countries. Moreover, validating output becomes challenging as the product’s use cases are unpredictable. Understanding how and where users apply the generated content is crucial for refining the model. Implementing robust testing methodologies, obtaining user feedback, and staying adaptable to evolving user needs are integral components of effective validation in generative AI product development.

Conclusion

Integrating AI into workflows and developing AI-based products is beneficial, but a comprehensive understanding of the considerations outlined above is equally important. We’ve highlighted numerous crucial considerations for your AI product development. Sparity can be your expert partner whether you’re integrating AI into your firm or aiming to create an AI-based tool. We can assist you in meeting all the above-mentioned requirements during the design and development of your AI tool.