Artificial intelligence is advancing at record speed, but turning experiments into reliable, scalable business outcomes is still one of the biggest challenges for organizations. As models grow more complex and deployment environments shift across cloud and hybrid architectures, teams need a framework that keeps everything consistent.

That’s exactly where MLflow comes in.

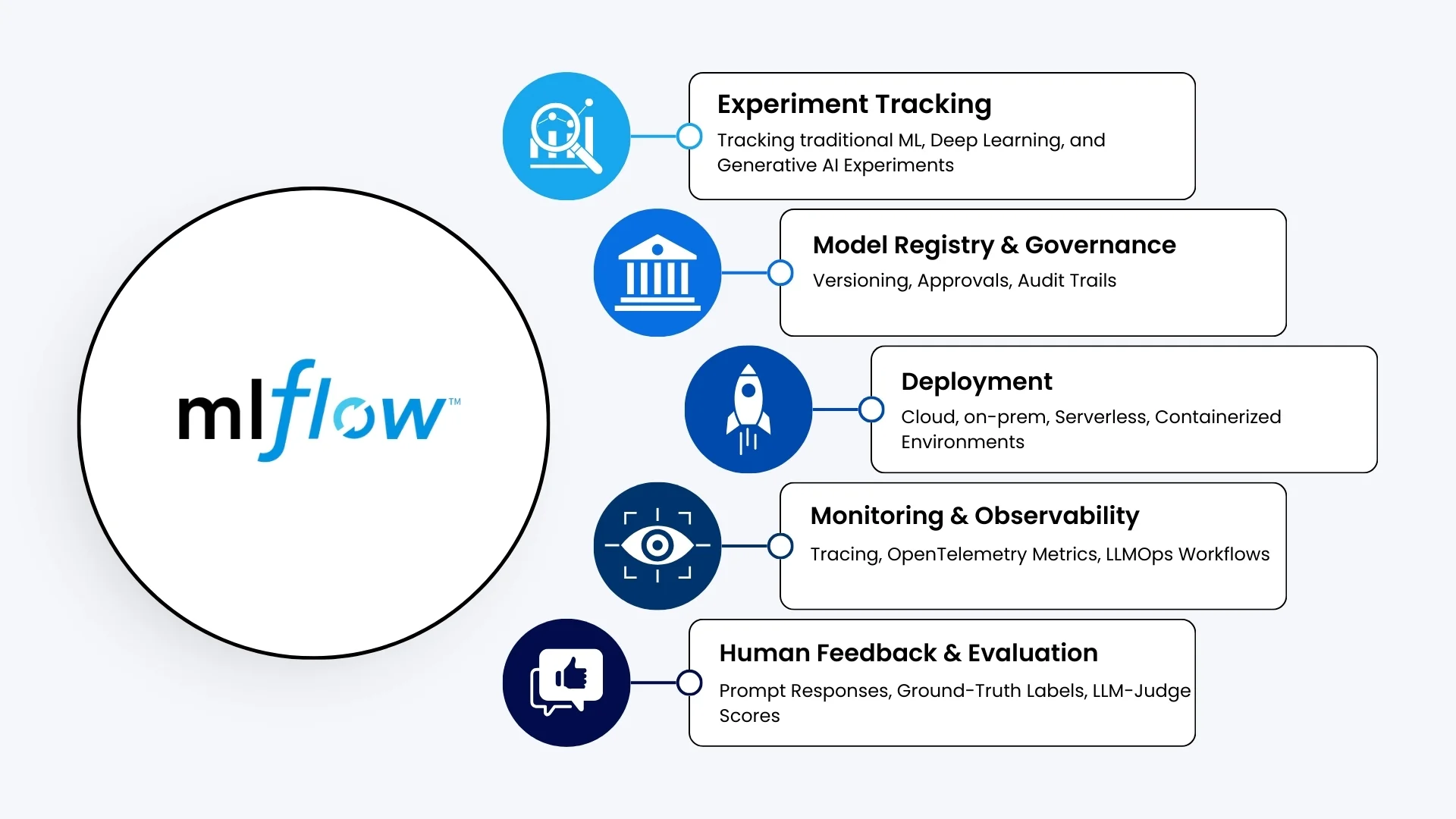

MLflow is an open-source platform, purpose-built to assist machine learning practitioners and teams in handling the complexities of the machine learning process. It focuses on the full lifecycle for machine learning projects, ensuring that each phase is manageable, traceable, and reproducible.

MLflow has become a foundation for MLOps: it sees over 30 million monthly downloads, draws contributions from over 850 developers worldwide, and powers ML and deep learning workloads for thousands of enterprises.

MLflow 3.x: Unified Platform for All AI Workloads

MLflow 3.x has evolved into a unified platform that supports the full spectrum of AI, from traditional machine learning and deep learning to production-ready generative AI. The latest release adds a new abstraction layer for handling AI assets, so that every model, whether a classic ML algorithm or a GenAI application, follows the same patterns for lineage, tracking, and deployment.

Table of Contents

- MLflow 3.x: Unified Platform for All AI Workloads

- But, what’s New in MLflow 3.0?

- Enterprise-Grade Model Registry and Governance

- Generative AI and LLMOps with MLflow 3

- Observability, Tracing, and Agent Workflows

- Automation, Deployment, and Databricks Integration

- MLflow in the 2025 MLOps Stack

- What Enterprises Gain With MLflow 3.0

- The Future of Enterprise AI with MLflow

- How Sparity Can Help You Leverage MLflow

But, what’s New in MLflow 3.0?

With the release of MLflow 3.0, the platform has taken a major leap forward. These are the upgrades that make it significant:

- Unified AI support: MLflow, which previously supported traditional ML and deep learning, extends in 3.0 to generative AI applications and AI agents. This lets your teams rely on a single tool for different workloads.

- Enhanced versioning & tracing for GenAI: LoggedModel, the new metadata entity, connects models and agents to the exact code, prompt versions, evaluation runs, and deployment metadata, ensuring full traceability and reproducibility.

- Cross-workload evaluation & observability: MLflow offers a unified evaluation and monitoring framework that ensures consistent quality control and comparison across workloads. The same framework applies whether you are training a classifier or fine-tuning an LLM.

- Simplified deployment workflows: With improved model registry and deployment workflows, production-grade deployment (cloud, on-prem, edge) becomes easier, reducing friction on the way from experimentation to production.
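To make the traceability idea concrete, here is a minimal sketch of a lineage record that ties a model to its code, prompt version, evaluation runs, and deployment target. It uses only the Python standard library and is not MLflow's LoggedModel API; the `LineageRecord` class, its field names, and the `lineage_fingerprint` helper are hypothetical names chosen for illustration.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class LineageRecord:
    """Hypothetical lineage record; field names are illustrative, not MLflow's schema."""
    model_name: str
    code_commit: str
    prompt_version: str
    evaluation_run_ids: tuple
    deployment_target: str


def lineage_fingerprint(record: LineageRecord) -> str:
    """Hash the record's canonical JSON form so identical lineage yields an identical ID."""
    canonical = json.dumps(asdict(record), sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Example values below are made up for the sketch.
baseline = LineageRecord("support-agent", "a1b2c3d", "prompt-v1", ("run-1", "run-2"), "staging")
same = LineageRecord("support-agent", "a1b2c3d", "prompt-v1", ("run-1", "run-2"), "staging")
changed_prompt = LineageRecord("support-agent", "a1b2c3d", "prompt-v2", ("run-1", "run-2"), "staging")

print(lineage_fingerprint(baseline) == lineage_fingerprint(same))            # same lineage
print(lineage_fingerprint(baseline) == lineage_fingerprint(changed_prompt))  # prompt changed
```

The point of the sketch is that any change to code, prompt, or evaluation inputs produces a different fingerprint, which is the property that makes a deployed model reproducible and auditable.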

Enterprise-Grade Model Registry and Governance

MLflow’s Model Registry has evolved from a storage location into a governance hub. The MLflow platform now also supports evaluating and monitoring AI agents.

“We’re open-sourcing a huge swath of our evaluation capabilities into MLflow,” said Craig Wiley, senior director of product for AI and machine learning at Databricks.

This evolution introduces stronger operational guardrails. Support for webhooks and automated registry events enables integration with CI/CD pipelines, approval processes, and alerting systems. Teams gain tighter oversight when promoting models from experimentation to staging and production, so that every action is tracked and governed.
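As an illustration of how a registry event might feed an approval process, the sketch below shows a webhook receiver that checks a payload signature and then decides what the pipeline should do. It assumes the sender signs each payload with a shared secret using HMAC-SHA256, which is a common webhook convention; the event name, payload fields, and function names are hypothetical and should be replaced with whatever your registry actually emits.

```python
import hashlib
import hmac
import json

# Hypothetical event names that should trigger a human approval step.
EVENTS_REQUIRING_APPROVAL = {"model_version.promotion_requested"}


def sign(secret: bytes, body: bytes) -> str:
    """Compute the hex HMAC-SHA256 signature of a request body."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def handle_registry_event(secret: bytes, body: bytes, signature: str) -> str:
    """Verify the signature, then map the event to a pipeline action."""
    # compare_digest avoids leaking timing information during the comparison.
    if not hmac.compare_digest(sign(secret, body), signature):
        return "rejected"
    event = json.loads(body)
    if event.get("event") in EVENTS_REQUIRING_APPROVAL:
        return "open_approval_request"
    return "log_only"


# Example payload; all values are made up for the sketch.
secret = b"example-shared-secret"
body = json.dumps(
    {"event": "model_version.promotion_requested", "model": "churn-classifier", "version": 7}
).encode("utf-8")

print(handle_registry_event(secret, body, sign(secret, body)))
print(handle_registry_event(secret, body, "0" * 64))
```

Rejecting unsigned or tampered payloads before acting on them keeps the approval trail trustworthy, which matters once registry events can trigger deployments.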

Robust evaluation frameworks are especially crucial for organizations deploying agents in customer-facing environments. Continuous evaluation helps confirm that agents deliver reliable, accurate, and trustworthy responses while also monitoring for fairness, bias, and robustness.

Generative AI and LLMOps with MLflow 3

The most important shift in 2025 is MLflow’s expanded focus on generative AI and LLM-driven workloads. MLflow 3 includes dedicated capabilities for logging GenAI application behavior — from prompts and responses to traces and evaluation outcomes. This brings deeper visibility into how LLM systems perform and evolve over time.

The platform now also supports tracking human feedback, ground-truth references, and even LLM-judge scores directly within the workflow. These insights are essential for continuously improving generative AI applications at scale.
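One way such feedback can be put to work is to check how often an LLM judge agrees with human reviewers. The sketch below is a plain-Python illustration rather than MLflow's feedback API; the assessment dictionaries, their keys, and the `judge_agreement` function are assumptions made for the example.

```python
from collections import defaultdict


def judge_agreement(assessments):
    """Return the share of traces where the LLM judge matches the human verdict.

    Only traces that have both a human and an LLM-judge assessment are counted.
    Returns None when no trace has both.
    """
    by_trace = defaultdict(dict)
    for item in assessments:
        by_trace[item["trace_id"]][item["source"]] = item["passed"]
    paired = [v for v in by_trace.values() if "human" in v and "llm_judge" in v]
    if not paired:
        return None
    return sum(v["human"] == v["llm_judge"] for v in paired) / len(paired)


# Hypothetical assessments: t1 agrees, t2 disagrees, t3 has no human review.
assessments = [
    {"trace_id": "t1", "source": "human", "passed": True},
    {"trace_id": "t1", "source": "llm_judge", "passed": True},
    {"trace_id": "t2", "source": "human", "passed": False},
    {"trace_id": "t2", "source": "llm_judge", "passed": True},
    {"trace_id": "t3", "source": "llm_judge", "passed": True},
]

print(judge_agreement(assessments))
```

A low agreement rate is a signal that the judge prompt needs revision before its scores are trusted for automated quality gates.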

For Sparity, this advancement means that LLMOps pipelines, including RAG-based solutions, enterprise assistants, and multi-step AI agents, can now be developed, deployed, and monitored using the same familiar MLflow framework used for traditional machine learning. This alignment enables faster adoption and governance without introducing new operational complexity.

Observability, Tracing, and Agent Workflows

AI observability is now a core focus in MLflow’s roadmap and recent releases. The platform provides robust tracing support for generative AI applications across multiple environments and SDKs, including TypeScript and popular orchestration frameworks. Additionally, it integrates with tools like Semantic Kernel to capture complex agent workflows, enabling a comprehensive view of system behavior.

The latest MLflow versions also support OpenTelemetry-compatible metrics export. This allows span-level statistics from MLflow traces to feed directly into enterprise monitoring systems.
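To show what span-level statistics look like in practice, the following sketch aggregates a handful of spans into per-operation counts and latencies of the kind a monitoring system would ingest. It is a standard-library illustration, not MLflow's or OpenTelemetry's export code; the span dictionary shape (`name`, `start_ms`, `end_ms`) and the `span_statistics` function are assumptions.

```python
import math
from collections import defaultdict
from statistics import mean


def span_statistics(spans):
    """Group spans by name and return count, mean, and p95 duration for each name."""
    durations = defaultdict(list)
    for span in spans:
        durations[span["name"]].append(span["end_ms"] - span["start_ms"])

    stats = {}
    for name, values in durations.items():
        ordered = sorted(values)
        # Nearest-rank percentile: the smallest value covering 95% of observations.
        rank = max(1, math.ceil(0.95 * len(ordered)))
        stats[name] = {
            "count": len(ordered),
            "mean_ms": mean(ordered),
            "p95_ms": ordered[rank - 1],
        }
    return stats


# Hypothetical spans from two traces of a RAG-style application.
spans = [
    {"name": "retrieve", "start_ms": 0, "end_ms": 40},
    {"name": "retrieve", "start_ms": 100, "end_ms": 160},
    {"name": "llm_call", "start_ms": 40, "end_ms": 540},
]

stats = span_statistics(spans)
print(stats)
```

Aggregating by span name makes it easy to see which step of an agent workflow, such as retrieval or the model call, dominates end-to-end latency.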

Automation, Deployment, and Databricks Integration

MLflow now offers expanded support for deploying both traditional models and generative AI applications across serverless, containerized, and managed environments. When paired with Databricks, MLflow serves as the central backbone for unified MLOps workflows: models are trained on Lakehouse data, tracked in MLflow, registered with governance controls, and deployed through managed serving or external endpoints.

The integration with Databricks Workflows and Model Serving further automates the journey from experimentation to production. Teams benefit from built-in monitoring, automated promotion, and rollback capabilities, ensuring reliable, production-grade deployment with minimal operational overhead.
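The logic behind automated promotion and rollback can be sketched as two small checks: one that gates a candidate model against the current production model, and one that watches live error rates after release. This is an illustration of the idea only, not a Databricks or MLflow API; the metric names, thresholds, and function names are hypothetical.

```python
def promotion_decision(candidate, production, min_gain=0.01, max_latency_ms=500.0):
    """Promote only if the candidate is fast enough and clearly more accurate."""
    if candidate["p95_latency_ms"] > max_latency_ms:
        return "keep_production"
    if candidate["accuracy"] - production["accuracy"] < min_gain:
        return "keep_production"
    return "promote"


def should_roll_back(live_error_rate, baseline_error_rate, tolerance=0.02):
    """Roll back when the live error rate exceeds the baseline by more than the tolerance."""
    return live_error_rate - baseline_error_rate > tolerance


# Hypothetical evaluation results for the current and candidate models.
production = {"accuracy": 0.91, "p95_latency_ms": 320.0}
better = {"accuracy": 0.93, "p95_latency_ms": 350.0}
too_slow = {"accuracy": 0.95, "p95_latency_ms": 900.0}

print(promotion_decision(better, production))
print(promotion_decision(too_slow, production))
print(should_roll_back(live_error_rate=0.10, baseline_error_rate=0.03))
```

Keeping the thresholds as explicit parameters makes the promotion policy reviewable, so the same rules can be versioned alongside the model they govern.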

MLflow in the 2025 MLOps Stack

Industry roadmaps for MLOps in 2025 consistently position MLflow as a foundational element of the modern AI ecosystem. Alongside tools for versioning, orchestration, deployment, and monitoring, MLflow serves as the central layer that keeps experiments, models, and generative AI applications organized, reproducible, and consistent across diverse environments. Its role as a unifying platform ensures teams can manage the full lifecycle of AI workloads without fragmentation or loss of traceability.

What Enterprises Gain With MLflow 3.0

MLflow 3.0 is a step change in how enterprises operate AI. By bringing traditional ML, deep learning, and generative AI into a single lifecycle, organizations benefit from:

- One unified platform for every workload: No more fragmented tooling or parallel processes. Teams work faster with fewer operational hurdles.

- Stronger governance and compliance: Every model, prompt, and agent action is tracked and auditable, which is essential for regulated industries and high-stakes deployments.

- Faster experimentation: Improved automation and deployment workflows reduce the time spent moving models from notebooks to real users.

- Enhanced model quality and trust: Continuous evaluation ensures AI systems perform reliably in real-world scenarios, including fairness and robustness checks.

- Operational efficiency and cost savings: Standardized workflows and reduced re-engineering eliminate tool sprawl and lower MLOps overheads.

- Scalable LLMOps: Native support for GenAI pipelines means enterprises can innovate with large language models, multimodal assistants, and agents without reinventing operations.

The Future of Enterprise AI with MLflow

As AI systems grow more autonomous and interconnected, MLflow’s unified framework will continue to be the cornerstone for responsible, scalable, and auditable AI. From real-time LLMOps to multi-agent orchestration and cross-domain AI solutions, organizations that standardize on MLflow today will be better positioned to innovate tomorrow.

How Sparity Can Help You Leverage MLflow

With MLflow’s evolution into a full AI operations platform, enterprises need a strategic partner to design and implement the right architecture, governance, and workflows on top of it. Sparity can help by:

- Designing MLflow-centered MLOps architectures on Databricks Lakehouse and cloud platforms, covering both traditional ML and GenAI use cases.

- Implementing end-to-end pipelines for experiment tracking, registry workflows, deployment jobs, and AI observability based on the latest MLflow 3.x capabilities.

- Building LLMOps and GenAI solutions such as domain-specific assistants, AI agents, and multimodal workflows using MLflow’s tracing, feedback tracking, and governance features.

Ready to unlock enterprise-grade AI with MLflow 3.x? Partner with Sparity to build, deploy, and monitor your ML and GenAI pipelines, all under one unified, governed platform.